Linear Regression

Linear regression is a statistical modeling technique that seeks to establish a linear relationship between a dependent variable and one or more independent variables.

Goals

By the end of this lesson, you should be able to:

- Write cost function of linear regression.

- Implement Gradient Descent algorithm for optimisation.

- Train linear regression model using gradient descent.

- Evaluate linear regression model using

r^2and mean-squared-error. - Evaluate and choose learning rate.

- Plot cost function over iteration time.

- Plot linear regression.

linear regression, cost function, hypothesis, coefficients, update function, gradient descent, matrix form, metrics, mean squared error, coefficient of determination, train

Introduction

Linear regression is a machine learning algorithm dealing with a continuous data and is considered a supervised machine learning algorithm. Linear regression is a useful tool for predicting a quantitative response. Though it may look just like another statistical methods, linear reguression is a good jumping point for newer approaches in machine learning.

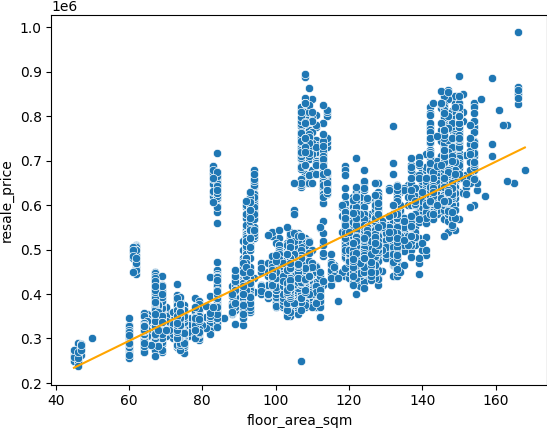

In linear regression we are interested if we can model the relationship between two variables. For example, in a HDB Resale Price dataset, we may be interested to ask if we can predict the resale price if we know the floor size. In the simplest case, we have an independent variable and a dependent variable in our data collection. The linear regression algorithm will try to model the relationship between these two variables as a straight line equation.

In this sense, the model consists of the two coefficients and . Once we know these two coefficients, we will be able to predict the value of for any .

In this sense, the model consists of the two coefficients and . Once we know these two coefficients, we will be able to predict the value of for any .

Hypothesis

We can make our straight line equation as our hypothesis. This simply means we make a hypothesis that the relationship between the independent variable and the dependent variable is a straight line. To generalize it, we will write down our hypothesis as follows.

where we can see that is the constant and is the gradient . The purpose of our learning algorithm is to find an estimate for and given the values of and . Let's see what this means on our actual data. Recall that we have previously work with HDB Resale price dataset. We will continue to use this as our example. In the codes below, we read the dataset, and choose resale price from TAMPINES and plot the relationship between the resale price and the floor area.

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

import numpy as np

file_url = 'https://www.dropbox.com/s/jz8ck0obu9u1rng/resale-flat-prices-based-on-registration-date-from-jan-2017-onwards.csv?raw=1'

df = pd.read_csv(file_url)

df_tampines = df.loc[df['town'] == 'TAMPINES',:]

sns.scatterplot(y='resale_price', x='floor_area_sqm', data=df_tampines)

We can easily notice that resale price increases as the floor area increases. So we can make a hypothesis by creating a straight line equation that predicts the resale price given the floor area data. The figure below shows the plot of a straight line and the existing data together.

y = 52643 + 4030 * df['floor_area_sqm']

sns.scatterplot(y='resale_price', x='floor_area_sqm', data=df_tampines)

sns.lineplot(y=y, x='floor_area_sqm', data=df_tampines, color='orange')

Note that in the above code, we created a straight line equation with the following coefficients:

and

In machine learning, we call and as the model coefficents or parameters. What we want is to use our training data set to produce estimates and for the model coefficients. In this way, we can predict future resale prices by computing

where indicates a prediction of . Note that we use the hat symbol to denote the estimated value for an unknown parameter or coefficient or to denote the predicted value. The predicted value is also called a hypothesis.

Try out the code yourself here:

Cost Function

In order to find the values of and , we will apply optimization algorithm that minimizes the error. The error caused by the difference between our predicted value and the actual data is captured in a cost function. Let's find our cost function for this linear regression model.

We can get the error by taking the difference between the actual value and our hypothesis and square them. The square is to avoid cancellation due to positive and negative differences. This is to get our absolute errors. For one particular data point , we can get the error square as follows.

Assume we have data points, we can then sum over all data points to get the Residual Sum Square (RSS) of the errors.

We can then choose the following equation as our cost function.

The division by is to get an average over all data points. The constant 2 in the denominator is make the derivative easier to calculate.

The learning algorithm will then try to obtain the constant and that minimizes the cost function.

Gradient Descent

One of the algorithm that we can use to find the constants by minimizing the cost function is called gradient descent. The algorithm starts by some initial guess of the constants and use the gradient of the cost function to make a prediction where to go next to reach the bottom or the minimum of the function. In this way, some initial value of and , we can calculate the its next values using the following equation.

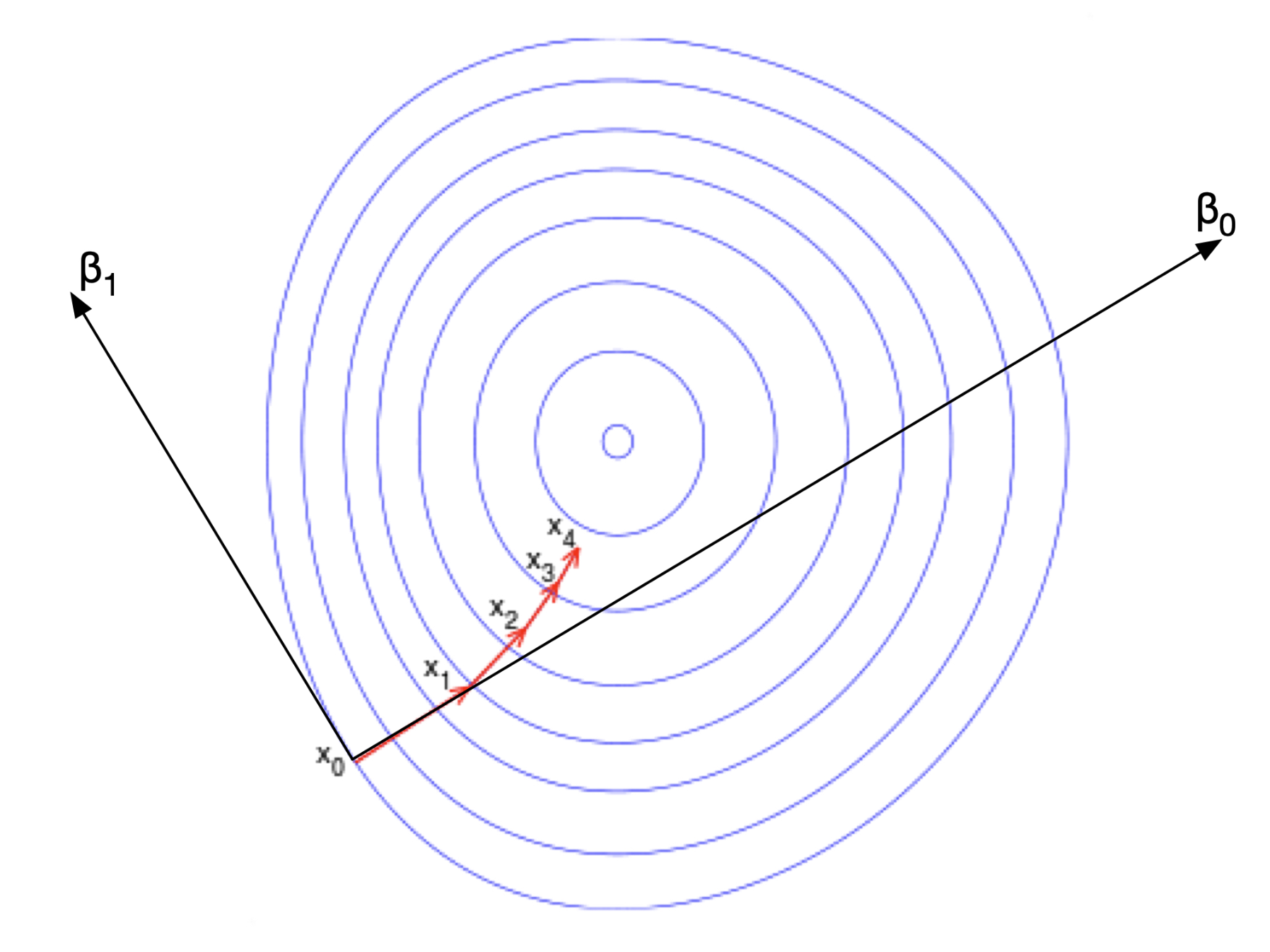

In order to understand the above equation, let's take a look at a two countour plot below.

The contour plot shows the minimum somewhere in the centre. The idea of gradient descent is that we move the fastest to the minimum if we choose to move in the direction with the steepest slope. The steepest slope can be found from the gradient of the function. Let's look at point in the figure. The gradient in the direction of is non zero as can be seen from the contour since it is perpendicular to the contour lines. On the other hand, the gradient in the direction of is zero as it is parallel with the contour line at . Recall that contour lines show the points with the same value. When the points have the same values, the gradient is zero. We can then substitute this into the above equation.

The partial derivative with respect to is non-zero while the derivative with respect to is zero. So we have the following:

where is the gradient in direction which is the partial derivative . If the optimum is a minima, then , a negative value. Now, we can see how the next point increases in direction but not in direction at .

When the gradient in the direction is no longer zero as in the subsequent steps, both and increases by some amount.

We can actually calculate the derivative of the cost function analytically.

We can substitute our straight line equation into to give the following.

Now we will differentiate the above equation with respect to and .

Let's first do it for .

or

Now, we need to do the same by differentiating it with respect to .

or

Now we have the equation to calculate the next values of and using gradient descent.

Matrix Operations

We can calculate these operations using matrix calculations.

Hypothesis

Recall that our hypothesis (predicted value) for one data point was written as follows.

If we have data points, we will then have a set of equations.

We can rewrite this in terms of matrix multiplication. First, we write our independent variable as a column vector.

To write the system equations, we need to add a column of constant 1s into our independent column vector.

and our constants as a column vector too.

Our system equations can then be written as

The result of this matrix multiplication is a column vector of . Note that in the above matrix equation, we use to denote the column vector of predicted value or our hypothesis.

Cost Function

Recall that the cost function is written as follows.

We can rewrite the square as a multiplication instead and make use of matrix multplication to express it.

Writing it as matrix multiplication gives us the following.

Gradient Descent

Recall that our gradient descent equations update functions were written as follows.

And recall that our independent variable is a column vector with constant 1 appended into the first column.

Transposing this column vector results in

Note that we can write the update function summation also as a matrix operations.

Substituting the equation for , we get the following equation.

In this notation, the capital letter notation indicates matrices and small letter notation indicates vector. Those without bold notation are constants.

Metrics

After we build our model, we usually want to evaluate how good our model is. We use metrics to evaluate our model or hypothesis. To do this, we should split the data into two:

- training data set

- test data set

The training data set is used to build the model or the hypothesis. The test data set, on the other hand, is used to evaluate the model by computing some metrics.

Mean Squared Error

One metric we can use here is called the mean squared error. The mean squred error is computed as follows.

where is the number of predicted data points in the test data set, is the actual value in the test data set, and is the predicted value obtained using the hypothesis and the independent variable in the test data set.

R2 Coefficient of Determination

Another metric is called the coefficient or the coefficient of determination. This is computed as follows.

where

where is the actual target value and is the predicted target value.

where

and is the number of target values.

This coefficient gives you how close the data is to a straight line. The closer it is to a straight line, the value will be close to 1.0. This means that the straight line model fits the data pretty well. When the value is close to 0. This means that the straight line model does not describe the data well.

In your problem sets, you will work on writing a code to do all these tasks.